
The much-anticipated Bitcoin halving, scheduled for April 2024, is fast approaching, and the buzz in the crypto market is growing louder. Alongside this event, the rise of Ordinals and the launch of the Runes Protocol promise to open a new niche within the Bitcoin ecosystem.

In this article, DWF Ventures takes a closer look at Ordinals, BRC-20, and the Runes Protocol, and considers what they could mean for the future. Below is a brief snapshot of the Ordinals ecosystem we compiled, with crypto projects plotted by volume and market cap:

Ordinals

Ordinals, introduced by Casey Rodarmor in January 2023 amid some controversy, unlocked the ability to inscribe data directly onto individual satoshis, creating NFT-like artifacts on Bitcoin. This has since driven a wave of developments, including the emergence of BRC-20 tokens and the Runes Protocol.
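Inscribing data "onto individual satoshis" relies on ordinal theory giving every sat a serial number in the order it was mined, which ties Ordinals directly to the halving schedule. The minimal Python sketch below computes that numbering from block subsidies alone; it ignores fees and sats lost to underpaid coinbases, and the function names are illustrative rather than taken from the `ord` codebase.

```python
# Ordinal theory sketch: sats are numbered in the order they were mined,
# so the first sat of a block equals the total subsidy of all earlier blocks.
# Fees and sats lost to underpaid coinbases are ignored here.

COIN = 100_000_000           # sats per BTC
HALVING_INTERVAL = 210_000   # blocks between subsidy halvings


def subsidy(height: int) -> int:
    """Block subsidy in sats: 50 BTC, halved every 210,000 blocks."""
    halvings = height // HALVING_INTERVAL
    if halvings >= 64:  # Bitcoin Core also returns 0 past 64 halvings
        return 0
    return (50 * COIN) >> halvings


def first_sat(height: int) -> int:
    """Ordinal number of the first sat created in block `height`."""
    total = 0
    epoch_start = 0
    while epoch_start < height:
        blocks = min(HALVING_INTERVAL, height - epoch_start)
        total += blocks * subsidy(epoch_start)  # every block in an epoch pays the same
        epoch_start += HALVING_INTERVAL
    return total
```

The April 2024 halving happens at block 840,000, so `subsidy(840_000)` returns 312,500,000 sats (3.125 BTC), half of the previous 6.25 BTC; each halving therefore also halves how many newly numbered sats enter circulation per block.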

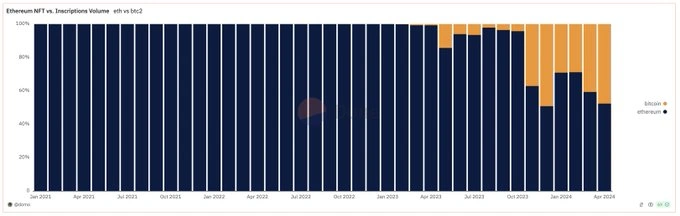

Ordinals have seen exponential growth, especially as the Bitcoin halving and the launch of the Runes Protocol draw near. In fact, NFT trading volumes on Bitcoin have recently rivaled those on Ethereum, with OKX and Magic Eden serving as the primary marketplaces. This growth shows the increasing appeal of Bitcoin-based developments.

Popular NFT collections on Ordinals, such as Nodemonkes, Bitcoin Puppets, and Quantum Cats XYZ, have further amplified the ecosystem’s vibrancy. These collections have distributed valuable airdrops, including the likes of Runecoin and Pups, to their holders. This has not only rewarded the community but also fostered a loyal and active base of supporters, driving excitement around the Runes Protocol.

BRC-20

Building on Ordinals, BRC-20 is a fungible token standard that uses inscriptions to create interchangeable tokens, similar to Ethereum’s ERC-20, by storing token data as JSON within the Bitcoin blockchain. Runes, set to launch alongside the April 2024 halving, aims to address some of the limitations of Ordinals and BRC-20.
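To make "storing token data" concrete: each BRC-20 operation (deploy, mint, transfer) is a small JSON text inscription, and balances exist only in the state that off-chain indexers derive by replaying those inscriptions. The sketch below builds the JSON payloads and runs a deliberately simplified toy indexer; the helper names are hypothetical, amounts are whole numbers only (real BRC-20 allows decimals), and transfer handling is omitted.

```python
import json

VALID_OPS = {"deploy", "mint", "transfer"}


def brc20_payload(op: str, tick: str, **fields: str) -> str:
    """Build the JSON text that would be inscribed for a BRC-20 operation."""
    if op not in VALID_OPS:
        raise ValueError(f"unknown BRC-20 op: {op}")
    body = {"p": "brc-20", "op": op, "tick": tick, **fields}
    # Compact separators keep the inscription (and its fee) small.
    return json.dumps(body, separators=(",", ":"))


def apply_op(state: dict, payload: str, owner: str) -> None:
    """Toy indexer (hypothetical): whole-number amounts, deploy and mint only."""
    op = json.loads(payload)
    if op.get("p") != "brc-20":
        return
    tick = op["tick"].lower()  # tickers are treated case-insensitively
    if op["op"] == "deploy" and tick not in state:  # first deploy wins
        max_supply = int(op["max"])
        state[tick] = {
            "max": max_supply,
            "lim": int(op.get("lim", max_supply)),
            "minted": 0,
            "balances": {},
        }
    elif op["op"] == "mint" and tick in state:
        token = state[tick]
        amt = int(op["amt"])
        if amt > token["lim"]:
            return  # over the per-mint limit: the indexer ignores it
        amt = min(amt, token["max"] - token["minted"])  # cap at remaining supply
        if amt <= 0:
            return
        token["minted"] += amt
        token["balances"][owner] = token["balances"].get(owner, 0) + amt
```

Because validity is decided by indexers rather than Bitcoin consensus, every wallet and marketplace must run the same replay rules, which is part of the complexity Runes sets out to reduce.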

Runes Protocol

Also developed by Casey Rodarmor, Runes acts as the fungible counterpart to Ordinals, reducing complexity through its UTXO-based model. It requires less data and storage than BRC-20 tokens, making it an attractive choice for developers and users alike. Pre-Rune projects have gained substantial attention, with Runecoin holders mining RSIC runes and pooling their positions to maximise rewards. The pre-market for RSIC on Whales Market is already live, creating buzz around the protocol even before its official launch.
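Part of why Runes is lighter than BRC-20 is its encoding: a Runes message (a "runestone") sits in a transaction output whose script starts with `OP_RETURN` followed by `OP_13`, and its fields are packed as LEB128 variable-length integers rather than JSON text. The sketch below shows only that byte-level framing; it does not reproduce the protocol's field tags, does not enforce the spec's 128-bit cap on integers, and only handles payloads that fit in a single direct push.

```python
OP_RETURN = 0x6A
OP_13 = 0x5D  # marks an OP_RETURN output as a runestone


def encode_varint(n: int) -> bytes:
    """LEB128-encode a non-negative integer: 7 bits per byte, low bits first."""
    if n < 0:
        raise ValueError("varints are unsigned")
    out = bytearray()
    while True:
        byte = n & 0x7F
        n >>= 7
        if n:
            out.append(byte | 0x80)  # high bit set: more bytes follow
        else:
            out.append(byte)
            return bytes(out)


def decode_varints(data: bytes) -> list[int]:
    """Decode a concatenated sequence of LEB128 integers."""
    values, current, shift = [], 0, 0
    for byte in data:
        current |= (byte & 0x7F) << shift
        if byte & 0x80:
            shift += 7
        else:
            values.append(current)
            current, shift = 0, 0
    if shift:
        raise ValueError("truncated varint")
    return values


def runestone_script(integers: list[int]) -> bytes:
    """Frame integers as OP_RETURN OP_13 <push>; sketch handles pushes up to 75 bytes."""
    payload = b"".join(encode_varint(i) for i in integers)
    if len(payload) > 75:
        raise ValueError("payload too large for a single direct push")
    return bytes([OP_RETURN, OP_13, len(payload)]) + payload
```

Small numbers fit in a single byte, so a typical runestone is a few dozen bytes in an unspendable output, compared with a JSON inscription carried in witness data plus the separate transfer inscriptions that BRC-20 requires.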

A standout within the Runes ecosystem is Pups, the leading memecoin for Runes. Part of its supply was airdropped to Bitcoin Puppets and OPIUM holders, fostering a strong and engaged community from the outset. Its interoperability with Solana, enabled through Multibit, has further expanded its appeal, drawing attention from across the blockchain space.

Overall

As the Bitcoin halving approaches, many protocols are expected to launch on or migrate to Runes. While concerns about fragmentation and a lack of unified standards persist, we believe this lays a strong foundation for new use cases and that Runes could attract mass interest similar to the launch of BRC-20 tokens.

As a crypto venture capital fund, we believe in supporting builders on Bitcoin and its growing ecosystem. If you are looking for a like-minded partner, reach out to us at DWF Ventures.


The Hong Kong Web3 Festival has come to an end, leaving attendees optimistic about the city’s growing role in the global crypto ecosystem. DWF Labs was proud to sponsor this crypto conference for the second consecutive year, witnessing firsthand its growth in scale and influence in the Web3 space, while also hosting a side event, DWF Labs Haus, for the crypto community.

Hong Kong Web3 Festival’s Short Summary

The 2024 Hong Kong Web3 Festival featured higher participation, influential speakers, and a robust lineup of discussions and side events, solidifying Hong Kong’s position as a burgeoning hub for Web3 innovation. The city’s streets are increasingly adorned with crypto-related advertisements, reflecting a growing cultural acceptance of blockchain and crypto technologies.

Regulators are also playing a significant role: discussions around approving spot Bitcoin ETFs in Hong Kong, one of the most anticipated crypto market events of 2024, signal a supportive stance toward Web3. This regulatory openness is fostering confidence among developers, investors, and entrepreneurs.

Compared to 2023, this year’s festival saw a marked increase in participation, particularly from Western industry leaders. Figures like Ethereum co-founder Vitalik Buterin joined the event, alongside a growing number of major ecosystems and projects showcasing their innovations at the main conference and side events.

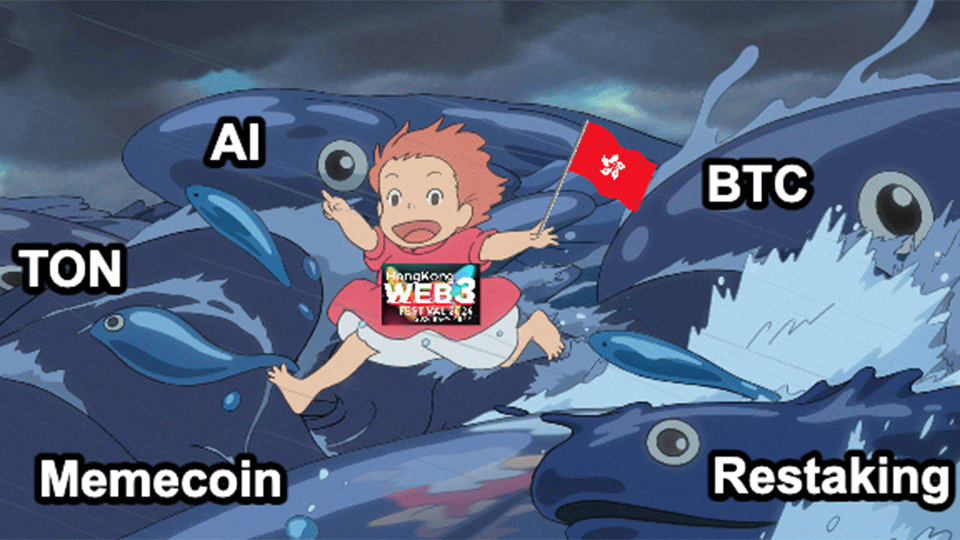

Hong Kong Web3 Festival was packed with engaging panels and discussions, with several key narratives taking center stage, including (but not limited to):

- Bitcoin (BTC) Ecosystem.

- Restaking.

- Artificial Intelligence (AI).

- TON Blockchain.

- Memecoins.

These themes highlight the broad and diverse areas of focus within the crypto industry, from infrastructure development to emerging applications.

Layer 2 Protocols for Bitcoin

BTC-related projects, including Web3 infrastructure and dapps, were particularly popular at the festival, driven by growing community attention in Hong Kong and other parts of Asia. This is unsurprising, given that Hong Kong offers a robust fundraising landscape for BTC projects.

We also saw an influx of new projects building on Bitcoin, spanning Layer 2 solutions, DeFi, staking and restaking, and interoperability. In particular, the success of projects like Merlin Chain, a Bitcoin Layer 2 (L2), is inspiring a new wave of blockchain builders to use the first cryptocurrency’s network as a base layer, exploring new ways to enhance scalability and utility within the ecosystem.

Liquid restaking

Another key theme worth mentioning is liquid restaking, a DeFi technique that lets already-staked assets be reused to secure additional services while remaining liquid. The recent mainnet launch of EigenLayer and the launch of EtherFi’s token underscore its growing importance.
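One common way a liquid restaking token stays "liquid" is share-based accounting: depositors receive transferable shares in a pool of restaked ETH, restaking rewards raise the ETH behind each share, and slashing lowers it. The sketch below is a hypothetical toy vault illustrating that generic pattern; it is not EigenLayer's or EtherFi's contract logic, and the class and method names are invented for illustration.

```python
from fractions import Fraction


class ToyRestakingVault:
    """Hypothetical share-based vault: each share is a claim on pooled restaked ETH."""

    def __init__(self) -> None:
        self.total_assets = Fraction(0)  # ETH staked and restaked by the vault
        self.total_shares = Fraction(0)  # liquid restaking tokens in circulation
        self.shares: dict[str, Fraction] = {}

    def rate(self) -> Fraction:
        """ETH backing one share (1 before any deposits)."""
        if self.total_shares == 0:
            return Fraction(1)
        return self.total_assets / self.total_shares

    def deposit(self, user: str, eth: Fraction) -> Fraction:
        """Mint shares at the current rate so existing holders are not diluted."""
        minted = eth / self.rate()
        self.total_assets += eth
        self.total_shares += minted
        self.shares[user] = self.shares.get(user, Fraction(0)) + minted
        return minted

    def accrue(self, eth: Fraction) -> None:
        """Positive for staking plus restaking rewards, negative for slashing."""
        self.total_assets += eth

    def value_of(self, user: str) -> Fraction:
        return self.shares.get(user, Fraction(0)) * self.rate()
```

Because the shares themselves are ordinary tokens, holders can use them as DeFi collateral while the underlying ETH keeps securing Ethereum and any additional services, which is where the capital-efficiency argument comes from; the flip side is that slashing on any of those services reduces every holder's rate.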

During the crypto conference, DWF Ventures’ Senior Associate, Fiona Claire Ma, shared insights into the Ethereum restaking landscape. Her presentation explored the innovations and challenges shaping restaking and its potential to redefine how Ethereum’s ecosystem operates. In particular, she argued that the growing focus on restaking signals its increasing relevance for bolstering infrastructure and improving capital efficiency within DeFi.

Artificial Intelligence and Crypto

AI continues to disrupt multiple verticals, including decentralised applications, Decentralised Physical Infrastructure Networks (DePIN), and the consumer and gaming sectors. The intersection of AI and crypto opens new doors for innovation, scalability, and user engagement.

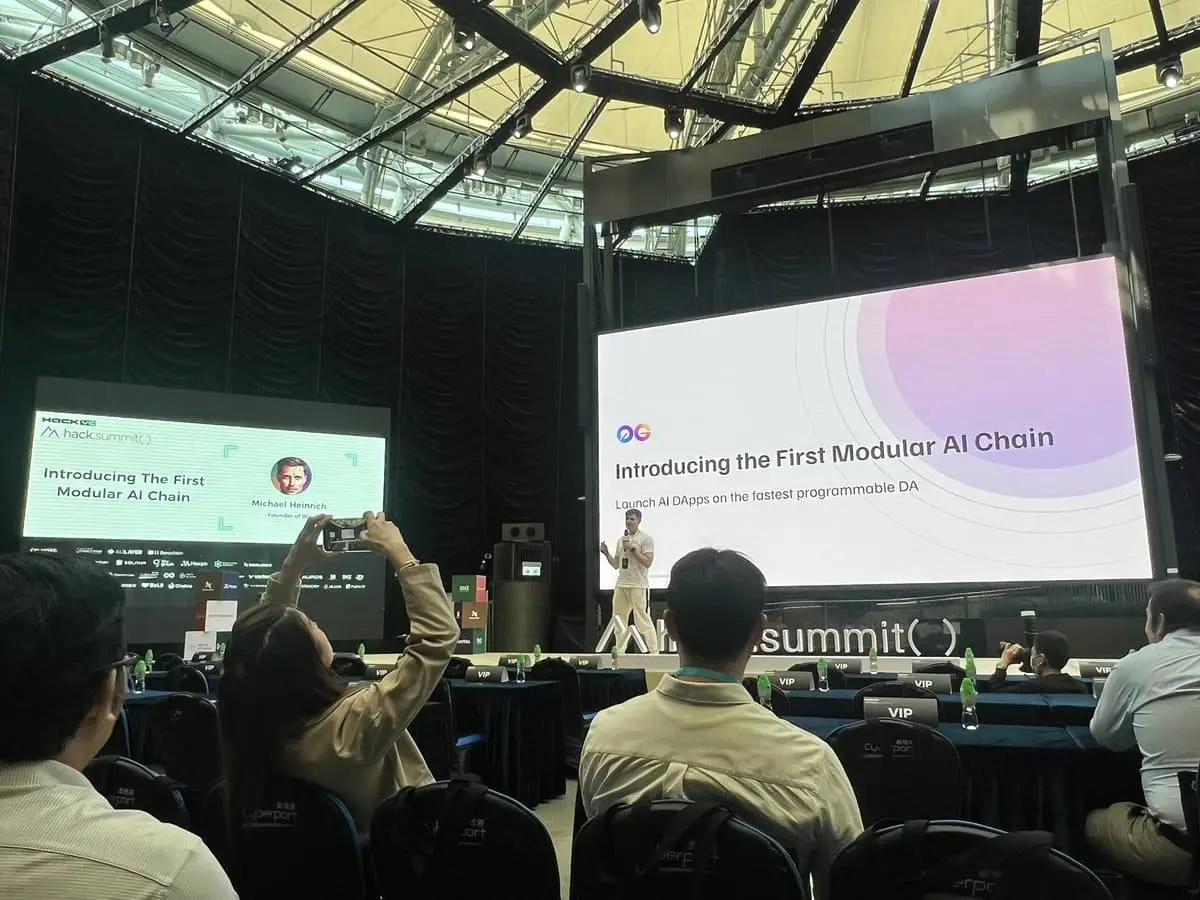

At the Hack Summit, held as part of the Hong Kong Web3 Festival, ZeroGravity (0G) introduced 0G AI, which it describes as the first modular AI chain, designed to deliver fast, scalable, and programmable solutions for AI-driven decentralised applications. This launch highlights the growing demand for AI integration in Web3, with modularity and scalability key to unlocking its full potential.

AI adoption is set to play a transformative role in reshaping blockchain applications, driving efficiency and enhancing user experiences across a wide range of industries.

The Rising Force of the TON Blockchain

TON was definitely one to watch at this conference. This L1 network has been rapidly gaining momentum since its inception, and its ecosystem launched its first Asia-focused accelerator program in February 2024. This initiative highlights TON’s commitment to promoting Web3 and crypto in one of the world’s fastest-growing regions for blockchain technology.

We at DWF Ventures are optimistic about TON’s potential to drive massive adoption in this cycle. By providing support to projects building on the TON protocol, we aim to contribute to the growth of this promising ecosystem. TON’s integration with Telegram, its ever-expanding developer community, and strategic programs like the Accelerator signal a bright future for this blockchain.

Overall

The Hong Kong Web3 Festival has clearly reinforced the city’s position as an up-and-coming crypto hub, thanks to its supportive regulatory environment, strong community engagement, and growing interest from global players.

With top narratives like Bitcoin, Restaking, and AI shaping ongoing discussions, the festival provided a glimpse into the future of blockchain innovation.

Suffice it to say, we’re excited about the opportunities Hong Kong offers and, as a crypto venture capital firm, remain committed to supporting the builders in this space. Working on a project? Connect with DWF Ventures to discuss partnership and support opportunities.


Memecoins have traditionally been viewed by crypto venture capital as speculative assets with little fundamental value. However, the market has demonstrated their undeniable mindshare power, capturing significant attention and engagement from retail participants. As the crypto industry enters a new cycle, memecoins are poised to evolve into a core go-to-market (GTM) strategy for ecosystems and projects seeking to rapidly onboard users and drive engagement. DWF Ventures takes a closer look at this phenomenon.

The Current Memecoin Market Landscape

As of March 2, 2024, the total market capitalisation of memecoins has exceeded $60 billion, with a daily trading volume surpassing $11 billion, according to CoinMarketCap. The explosive growth in the number of memecoins has captured significant interest from the crypto community, with new tokens emerging rapidly across different blockchain ecosystems. This exponential growth indicates that memecoins are becoming a crucial component of the crypto landscape, with increasing influence over market sentiment and user engagement.

Memecoins as a GTM Strategy

Memecoins are no longer just speculative instruments; they are evolving into powerful marketing tools for various verticals within the crypto industry. In the current cycle, memecoins are proving to be highly effective in driving engagement, liquidity, and adoption across:

- Infrastructure ecosystems (L1 and L2 blockchains).

- Consumer crypto and gaming (GameFi) sectors.

- New projects initiated by memecoin communities.

Memecoins in Infrastructure Ecosystems

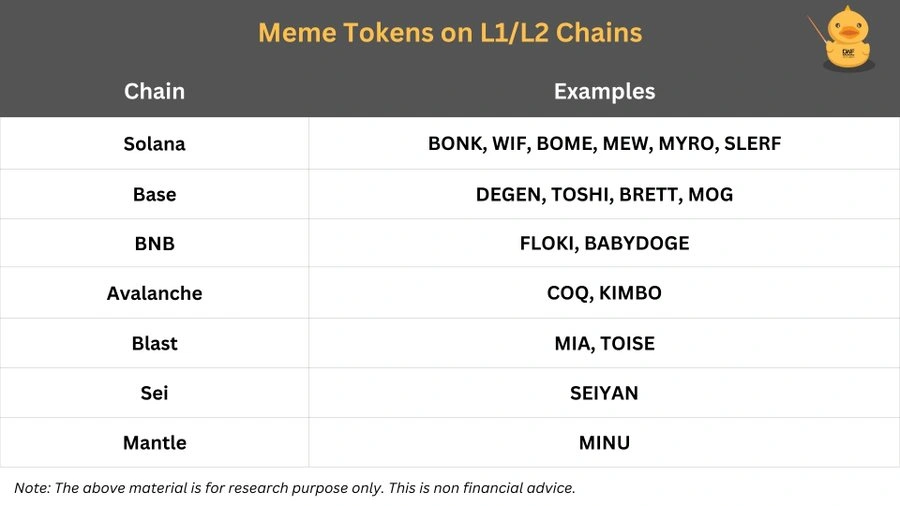

Memecoins are emerging as a strategic user acquisition channel for Layer 1 (L1) and Layer 2 (L2) ecosystems, serving as a vehicle to attract retail interest and drive traffic to blockchain networks. Prominent ecosystems have adopted memecoins as a tool to foster community engagement and brand recognition. Notable examples include:

- Solana (BONK): a community-driven token that has played a pivotal role in boosting Solana's visibility and retail adoption.

- Base (DEGEN): a memecoin that has rapidly gained traction, enhancing Base’s on-chain activity.

- BNB Chain (FLOKI): leveraging meme culture to onboard users and create a vibrant ecosystem.

The success of these tokens highlights how memecoins can reflect the popularity and engagement of an ecosystem, serving as a barometer for its overall health and community involvement. Solana, for example, has seen massive growth in public attention with the success of multiple memecoins, including BONK, WIF, and BOME, driving substantial network activity and liquidity.

Memecoins in Gaming and Consumer Applications

In a recent post, Ethereum co-founder Vitalik Buterin highlighted the importance of “positive-sum” value for memecoins, advocating for their integration into engaging applications rather than existing as purely speculative assets. He suggested leveraging memecoins to power play-to-earn (P2E) models and innovative consumer protocols that blend financial incentives with entertainment value.

While the previous crypto cycle saw the success of P2E projects like Axie Infinity, retail investors in this cycle seem to be more drawn to memecoins with built-in entertainment and community appeal. This shift presents an opportunity for gaming and consumer-focused projects to incorporate meme elements into their protocols, fostering both user engagement and financial incentives. By combining viral meme culture with rewarding gaming experiences, projects can tap into broader audiences and drive organic adoption.

New Projects Emerging from Memecoin Communities

The influence of memecoins has extended beyond mere speculation, as many teams behind popular tokens are now leveraging their established communities to launch new utility-focused projects. Examples include:

- ShibariumNet (SHIB): a Layer 2 solution built by the Shiba Inu team to enhance scalability and token utility.

- TokenFi (FLOKI): an ecosystem expansion aimed at offering DeFi and tokenisation solutions.

- BonkBot (BONK): an automated trading tool tailored for the Solana ecosystem.

These initiatives are designed to align with the original values of their respective memecoins while offering new utility, ensuring that existing communities remain engaged and incentivised. For instance, the Shiba Inu ecosystem has expanded to include projects like ShibaSwapDEX, providing token utilities such as payments and staking opportunities for SHIB holders. This symbiotic approach creates a win-win situation, where the memecoin accrues real token value while the new ecosystem projects benefit from a dedicated user base and faster adoption.

Overall

Memecoins have evolved beyond mere speculative instruments to become a powerful GTM strategy for crypto projects looking to build communities, attract liquidity, and drive awareness. Their ability to engage retail users, generate viral appeal, and foster loyalty makes them a valuable marketing tool for infrastructure, gaming, and consumer-focused ecosystems.

At DWF Ventures, we remain committed to supporting and investing in promising projects that leverage memecoins to onboard massive communities and create sustainable ecosystems. As the space continues to mature, we anticipate even greater innovations and deeper integration of memecoins into the broader crypto economy. If you are building in the memecoin sector, contact DWF Ventures to discuss potential collaboration.


March 2024 has been an exhilarating month for the crypto market, with major assets like Bitcoin (BTC) reaching all-time highs, and Ethereum (ETH) and Solana (SOL) hitting yearly peaks. This surge has ignited excitement once more in the market, trickling down to memecoins and shaping new narratives. Let’s explore the trends that were propelling the market forward during the month.

Market Highlights: BTC and Beyond

The crypto market soared in March, with BTC breaking all-time highs and ETH and SOL following closely with significant yearly peaks. This bullish momentum has not only fueled optimism among traders but also created a ripple effect across the broader crypto ecosystem.

The Month of Memecoins

While February’s narratives of restaking and Bitcoin Layer 2 solutions (L2s) remain relevant, March introduced new contenders. The momentum around presale memecoins and the staggering amount of funds raised on Solana underscore the shifting dynamics of the crypto market.

Memecoins have been a standout performer this month, benefiting from the trickle-down effect of major tokens’ success. While established names like DOGE, PEPE, BONK, and WIF have enjoyed strong performances, the real buzz was around memecoins leading the presale meta, such as BOME and SLERF.

Created by Darkfarms, BOME skyrocketed to a $1 billion market cap in just three days, spurred by an unprecedented listing on Binance. This explosive growth not only showcased the potential of memecoins but also triggered a wave of presale activity, particularly on Solana.

In March alone, over 655,000 SOL (valued at $122.5 million) was raised across 27 presale launches, highlighting the significant capital flowing into this niche.

As EigenLayer’s mainnet launch and the Bitcoin halving approach, the restaking and Bitcoin L2 narratives will likely gain even more traction. Meanwhile, the success of memecoins highlights the importance of community engagement and innovative go-to-market (GTM) strategies in driving adoption and growth.

In the coming months, memecoins are likely to become a key GTM strategy for new blockchains and crypto projects. Their ability to generate attention, foster community engagement, and drive adoption makes them a valuable asset in a rapidly evolving market.

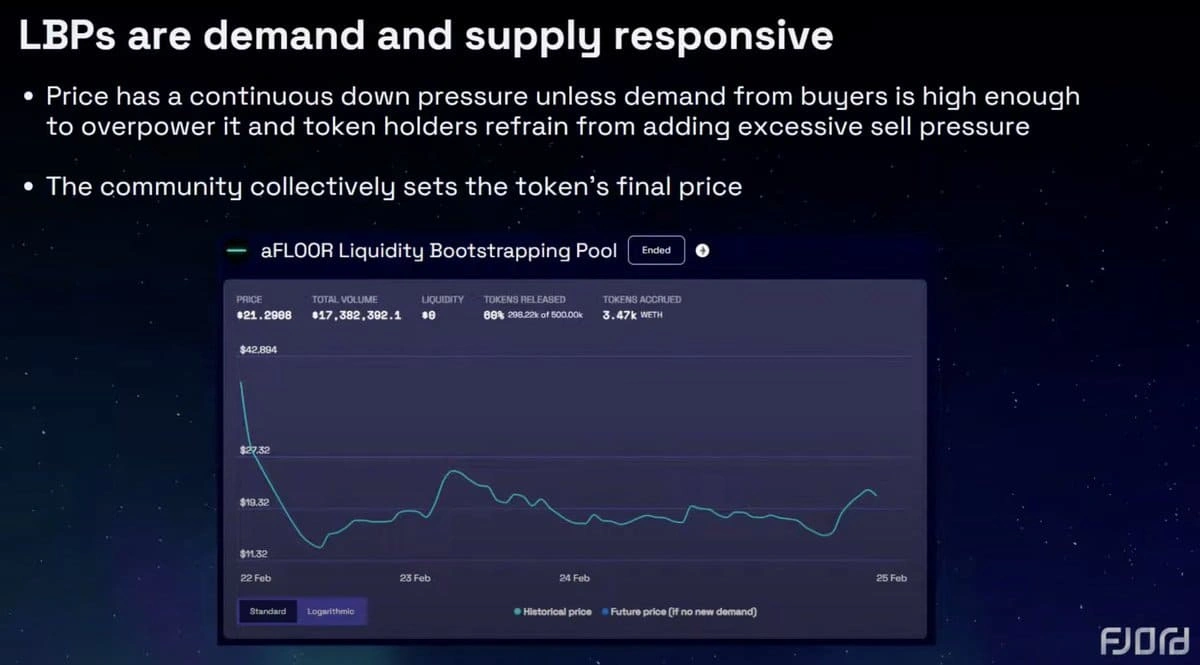

LBPs: A Fairer Token Distribution Model

Liquidity Bootstrapping Pools (LBPs) gained momentum this month as a fairer alternative to traditional ICOs. Unlike a fixed-price ICO, an LBP starts the token at a high price that falls over time unless buyers step in; this dynamic pricing makes it costly for whales and snipers to capture large portions of the supply early.

Key highlights include:

- High-profile LBPs from protocols like Fjord Foundry, MobyHQ, and hiBazaar.

- Solana’s entry into the LBP space via 1intro.

- Successful launches like DEAI and ARCX, which raised over $5 million each.

LBPs are expected to gain further traction, providing equitable token distribution and fostering community participation.
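The dynamic pricing behind LBPs comes from weighted-pool math: the spot price of the sale token is the collateral balance over its weight, divided by the token balance over its weight, and the LBP shifts those weights over the sale. The sketch below uses Balancer-style weighted-pool formulas with a linear weight schedule; the pool sizes, the 96/4 to 50/50 schedule, and the helper names are hypothetical, and swap fees are ignored.

```python
def weights_at(t: float, start: tuple[float, float], end: tuple[float, float]) -> tuple[float, float]:
    """Linearly interpolate (token, collateral) weights as t runs from 0 to 1."""
    w_token = start[0] + (end[0] - start[0]) * t
    return w_token, 1.0 - w_token


def spot_price(token_bal: float, collateral_bal: float, w_token: float, w_collateral: float) -> float:
    """Weighted-pool spot price of the token in collateral units (no swap fee)."""
    return (collateral_bal / w_collateral) / (token_bal / w_token)


def buy_tokens(token_bal: float, collateral_bal: float, w_token: float,
               w_collateral: float, collateral_in: float) -> float:
    """Tokens received for `collateral_in`, using the weighted-pool out-given-in formula."""
    ratio = collateral_bal / (collateral_bal + collateral_in)
    return token_bal * (1 - ratio ** (w_collateral / w_token))


# Hypothetical sale: 1,000,000 tokens against 100,000 USDC, weights 96/4 -> 50/50.
START, END = (0.96, 0.04), (0.50, 0.50)
for step in range(5):
    t = step / 4
    w_tok, w_col = weights_at(t, START, END)
    print(f"t={t:.2f} price={spot_price(1_000_000, 100_000, w_tok, w_col):.4f}")
```

With these made-up numbers and no buying, the price slides from 2.4 to 0.1 USDC, so a sniper who buys a large amount at the open both pays the highest price and pushes it higher through slippage, while patient buyers can wait for a price that reflects real demand.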

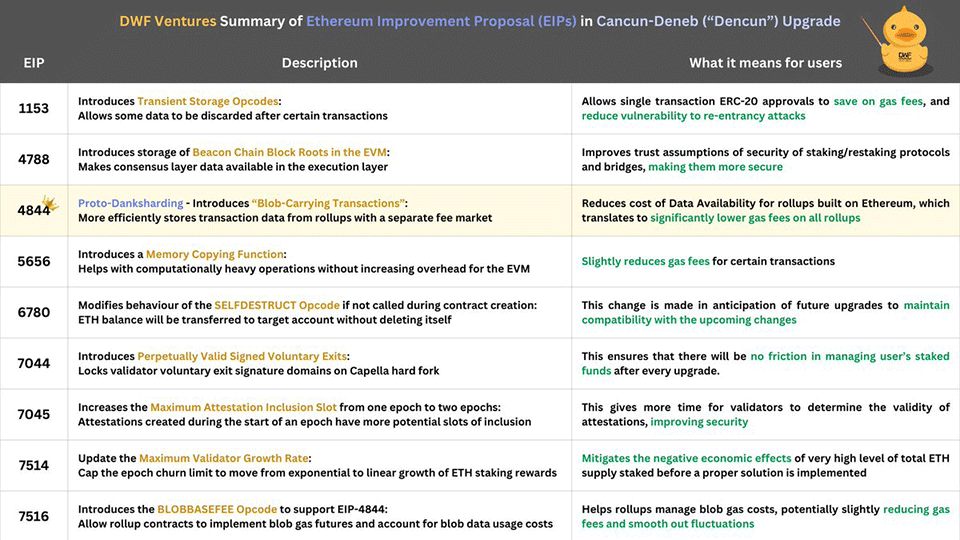

Ethereum’s Dencun Upgrade: Lowering L2 Fees

The much-anticipated Dencun upgrade went live this month, introducing “blobs” (EIP-4844), a cheaper way for Ethereum Layer 2s (L2s) to post their transaction data to Ethereum, which significantly reduces their fees. Before Dencun, L2s posted this data as regular calldata, so soaring Ethereum gas fees also drove up L2 gas costs.

Median transaction fees on L2s dropped by 50%-99%, according to preliminary findings:

- Arbitrum: $0.39 → $0.14

- Base: $0.37 → $0.03

- Optimism: $0.32 → $0.01

The Dencun upgrade shows Ethereum’s commitment to scalability and affordability, making L2s more accessible for users and developers.
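The fee drop comes from blobs having their own fee market, separate from execution gas. The sketch below reproduces the EIP-4844 blob base fee rule with the parameters activated in Dencun (a target of three blobs per block) and compares it with the calldata pricing L2s paid before; the wrapper function names are ours, while `fake_exponential` and the constants follow the EIP.

```python
# EIP-4844 parameters as activated in Dencun.
MIN_BASE_FEE_PER_BLOB_GAS = 1             # wei
BLOB_BASE_FEE_UPDATE_FRACTION = 3_338_477
GAS_PER_BLOB = 2**17                      # 131,072 blob gas per 128 KiB blob
TARGET_BLOB_GAS_PER_BLOCK = 3 * GAS_PER_BLOB


def fake_exponential(factor: int, numerator: int, denominator: int) -> int:
    """Integer approximation of factor * e**(numerator / denominator), per EIP-4844."""
    i = 1
    output = 0
    numerator_accum = factor * denominator
    while numerator_accum > 0:
        output += numerator_accum
        numerator_accum = (numerator_accum * numerator) // (denominator * i)
        i += 1
    return output // denominator


def next_excess_blob_gas(parent_excess: int, parent_used: int) -> int:
    """Excess grows when blocks use more blob gas than the target, shrinks otherwise."""
    return max(0, parent_excess + parent_used - TARGET_BLOB_GAS_PER_BLOCK)


def blob_base_fee(excess_blob_gas: int) -> int:
    """Price per unit of blob gas in wei, rising exponentially with sustained excess."""
    return fake_exponential(MIN_BASE_FEE_PER_BLOB_GAS, excess_blob_gas,
                            BLOB_BASE_FEE_UPDATE_FRACTION)


def calldata_gas(data: bytes) -> int:
    """Pre-Dencun cost of the same bytes as calldata: 16 gas per non-zero byte, 4 per zero byte."""
    return sum(16 if b else 4 for b in data)
```

While blob demand stays at or below the target, the excess stays at zero and blob gas costs the 1 wei minimum, whereas the same 128 KiB posted as non-zero calldata would need about 2.1 million execution gas priced at the regular gas market. That gap is what showed up as the L2 fee reductions listed above.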

AI x Crypto: Nvidia’s GTC Conference

The Nvidia GTC Conference further fueled excitement around the convergence of AI and crypto, showcasing groundbreaking possibilities for the future. A key highlight was a panel featuring Near Protocol’s founder, Illia Polosukhin, who discussed how AI models could change creative industries by improving accessibility for creators through Near Protocol.

This growing attention shows the potential of integrating AI with blockchain technology, signaling new opportunities for collaboration.

Fantom’s Sonic Upgrade: A Game-Changer for DeFi and Gaming

The upcoming Sonic Upgrade from the Fantom Foundation is generating considerable excitement in the community. Set to go live in Q2 2024, this upgrade promises significant performance improvements:

- 10,000 transactions per second (TPS).

- 2-second time-to-finality (TTF).

- Lower storage costs.

These advancements could position Fantom as a leading chain for major DeFi and GameFi use cases, making it a strong contender in the evolving blockchain ecosystem.

Yield Products: Redefining DeFi

DeFi yield products have taken center stage, with projects offering innovative solutions to maximize returns for users.

Ethena Labs launched with an impressive 27% APY for staking its USDe stablecoin, attracting over $1 billion in TVL within a month. This surge in demand has also boosted platforms like Pendle Finance, which lets users trade yield as part of more sophisticated strategies.
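Trading yield on Pendle works by splitting a yield-bearing asset into a principal token (PT) and a yield token (YT). A PT bought at a discount and redeemed at maturity locks in a fixed rate, which can be annualised like a zero-coupon bond. The sketch below assumes the PT redeems 1:1 for the underlying at maturity and ignores fees; the prices and function names are hypothetical, not Pendle's API.

```python
def implied_fixed_apy(pt_price: float, days_to_maturity: float) -> float:
    """Annualised fixed yield from buying a PT at `pt_price` (in units of the
    underlying) and redeeming it for 1 unit at maturity."""
    if not 0 < pt_price <= 1:
        raise ValueError("PT should trade at or below par")
    if days_to_maturity <= 0:
        raise ValueError("maturity must be in the future")
    return (1 / pt_price) ** (365 / days_to_maturity) - 1


def yt_price(pt_price: float) -> float:
    """PT and YT together represent one unit of the underlying, so YT = 1 - PT (no fees)."""
    return 1 - pt_price


# Hypothetical market: PT at 0.95 with 73 days left.
print(f"fixed APY: {implied_fixed_apy(0.95, 73):.2%}, YT price: {yt_price(0.95):.2f}")
```

A user who expects the underlying yield to stay high can buy the cheap YT for leveraged exposure to it, while a user who wants certainty buys the PT and locks in roughly 29% annualised in this made-up example.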

As projects continue to launch airdrop campaigns, products that aggregate liquidity for yield and points are gaining traction. Platforms like 0xFluid, EtherFi, and Airpuff let users leverage their collateral while earning multipliers on EigenLayer and LRT points, boosting overall yield potential.
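"Leveraging collateral" usually means looping: deposit a liquid restaking token, borrow against it at some loan-to-value (LTV), buy more of the token, deposit again, and repeat. Exposure (and any points earned on it) follows a geometric series that tops out at 1 / (1 - LTV). The sketch below shows that generic arithmetic with a hypothetical LTV; it is not any named platform's parameters and ignores borrow costs and liquidation risk.

```python
def looped_exposure(deposit: float, ltv: float, loops: int) -> float:
    """Total collateral after re-depositing borrowed funds `loops` times.

    Each loop borrows `ltv` of the last tranche and deposits it again, so the
    exposure is deposit * (1 + ltv + ltv**2 + ... + ltv**loops).
    """
    if not 0 <= ltv < 1:
        raise ValueError("LTV must be in [0, 1)")
    total = 0.0
    tranche = deposit
    for _ in range(loops + 1):
        total += tranche
        tranche *= ltv
    return total


def max_leverage(ltv: float) -> float:
    """Limit of the series as loops grow without bound: 1 / (1 - LTV)."""
    return 1 / (1 - ltv)


# Hypothetical: 10 LRT deposited at 80% LTV, looped three times.
exposure = looped_exposure(10, 0.8, 3)
print(f"exposure {exposure:.2f}, debt {exposure - 10:.2f}, cap {max_leverage(0.8):.1f}x")
```

The same multiplier that boosts points also magnifies losses: a small drop in the token's price relative to the borrowed asset can push a highly looped position into liquidation.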

Conclusion

March 2024 has been a month of records, innovations, and shifting narratives. From BTC’s historic highs to the rise of memecoins, LBPs, and Ethereum’s Dencun upgrade, the crypto space continues to evolve. As we head into April, the market remains optimistic.

One key event on the horizon is the much-anticipated Bitcoin halving, expected to drive liquidity and further market activity. In addition, EVM-compatible blockchains like Blast, Base, and Fantom are poised for significant growth, bolstered by strong ecosystems and catalysts like the Sonic upgrade.

We are excited to support the builders and communities driving the crypto market forward. If you are looking for support from a crypto venture capital firm, don't hesitate to contact DWF Ventures.


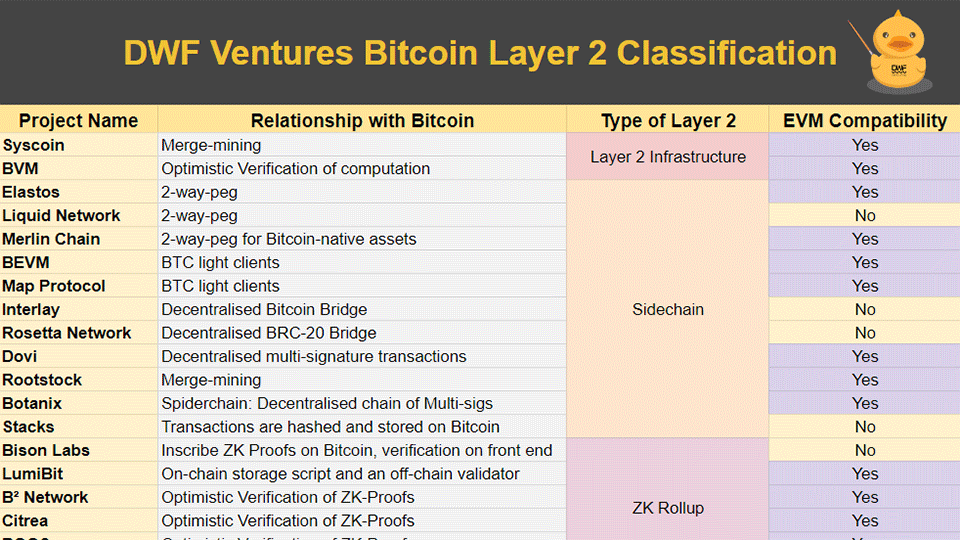

We recently compiled a list of Bitcoin Layer 2s, but how do they differ under the hood?

In this thread, we will dive deeper into how we classify these Layer 2s, and walk through Bitcoin's scalability journey to highlight how they aim to leverage Bitcoin as a base layer.


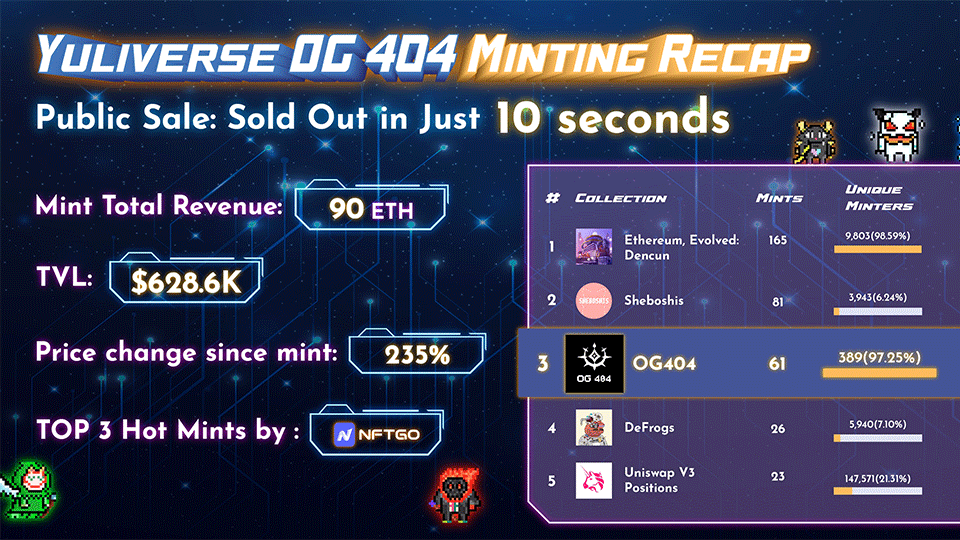

The launch of OG404, Yuliverse’s flagship NFT collection, marks a significant milestone in the GameFi space. With a limited supply of 1,000 NFTs, OG404 sold out in just 10 seconds, earning a spot among NFTGo’s Top 3 hot mints. Built on DN404, an experimental Ethereum token standard, this collection is reshaping liquidity and accessibility in the NFT and blockchain gaming ecosystem.

Here’s everything you need to know about Yuliverse and their new NFT collection OG404.

What is Yuliverse?

Yuliverse is a Web3 location-based game that blends blockchain technology with real-world exploration, much like a blockchain version of Pokémon GO. This GameFi project has demonstrated impressive growth and community engagement, securing its position as a leader in blockchain gaming:

- 140,000 daily active wallet addresses (DAU).

- Ranked among the Top 3 blockchain games in Japan.

- Third place for unique active wallets (UAW) on Polygon.

- First place for NFT trading volume on BNB Chain in 2023, with over 106,000 BNB traded.

- Integration with major crypto trading and management platforms, including the OKX Wallet.

OG404 NFT Collection

The OG404 NFT collection was designed to reward Yuliverse’s early supporters. With unique utilities and liquidity features, OG404 exemplifies the next generation of NFTs.

Utilities of OG404

- Trading Liquidity: Enhanced market accessibility.

- ART Airdrops: Exclusive token rewards for holders.

- In-Game Benefits: Extra perks within the Yuliverse ecosystem.

- Staking Opportunities: Additional rewards that can be earned by staking OG404.

Built on the DN404 token standard, OG404 takes crypto liquidity and utility to the next level.

How DN404 Transforms NFT Transactions

- Fractionalised Trading: Enables users to tokenise and trade fractions of a single NFT, significantly increasing its liquidity.

- Gas Efficiency: Compared to its predecessor ERC404, DN404 reduces transaction costs, making it more user-friendly.

- Dual Contracts: DN404 uses two contracts—an ERC20 base contract and an ERC721 mirror contract—to improve efficiency and minimise vulnerabilities.

DN404 vs. ERC404: Key Differences

While both DN404 and ERC404 innovate by combining ERC20 and ERC721 elements, there are notable distinctions. Firstly, ERC404 uses a single contract that interacts with both standards, whereas DN404 employs two separate contracts for better performance. Secondly, DN404 claims to offer greater efficiency and lower transaction costs, making it ideal for high-volume trading.

Thanks to the DN404 standard, OG404 can be traded directly on platforms like Uniswap. Swapping for less than one OG404 token yields a fractional OG404 balance without the NFT, while swapping for a complete OG404 token delivers both the OG404 token and the NFT itself.
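The behaviour described above follows a "whole-unit" rule shared by DN404 and ERC404: a wallet holds one NFT for each whole token in its fungible balance, so NFTs are minted or burned as the balance crosses whole-number boundaries. The Python sketch below is a hypothetical toy model of that rule only; it is not DN404's Solidity implementation, assumes 18 decimals, and simply issues fresh NFT IDs rather than reproducing how the standard assigns or reuses them.

```python
UNIT = 10**18  # one whole token in base units (18 decimals assumed)


class ToyHybridToken:
    """Hypothetical model of the whole-unit rule: one NFT per whole token held."""

    def __init__(self) -> None:
        self.balances: dict[str, int] = {}
        self.nfts: dict[str, list[int]] = {}
        self.next_id = 1

    def _sync_nfts(self, owner: str) -> None:
        owned = self.nfts.setdefault(owner, [])
        target = self.balances.get(owner, 0) // UNIT
        while len(owned) > target:  # balance fell below a whole unit: burn an NFT
            owned.pop()
        while len(owned) < target:  # balance reached a new whole unit: mint an NFT
            owned.append(self.next_id)
            self.next_id += 1

    def mint(self, owner: str, amount: int) -> None:
        self.balances[owner] = self.balances.get(owner, 0) + amount
        self._sync_nfts(owner)

    def transfer(self, sender: str, receiver: str, amount: int) -> None:
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        self.balances[receiver] = self.balances.get(receiver, 0) + amount
        self._sync_nfts(sender)
        self._sync_nfts(receiver)


# A pool holding 5 tokens sells half a token twice to the same buyer.
token = ToyHybridToken()
token.mint("pool", 5 * UNIT)
token.transfer("pool", "buyer", UNIT // 2)  # buyer: 0.5 tokens, no NFT yet
token.transfer("pool", "buyer", UNIT // 2)  # buyer: 1 token, so one NFT appears
print(len(token.nfts["pool"]), len(token.nfts["buyer"]))
```

Because the fungible balance is the source of truth, the collection can sit in an ordinary AMM pool and be traded in fractions, with NFTs following automatically whenever a wallet holds whole units.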

This new model significantly improves liquidity and accessibility, making OG404 one of the most versatile NFT collections in the market.

The development of OG404 was powered by a strategic partnership with AsterixLabs, leveraging the DN404 protocol to unlock new possibilities in GameFi. DWF Ventures, a key crypto venture investor in Yuliverse, has expressed enthusiasm about supporting the project’s growth and innovation.

Conclusion

Yuliverse’s OG404 NFT collection and the innovative DN404 token standard are setting a new benchmark for liquidity, accessibility, and utility in GameFi. With its rapid sellout and groundbreaking features, OG404 reflects the potential of NFTs to evolve into mainstream financial and gaming assets.

As the GameFi space continues to grow, Yuliverse is well-positioned to lead the charge with novel solutions that benefit gamers, developers, and investors alike.

As a crypto venture capital fund, we are always looking to partner with promising Web3 founders and projects. Keen to connect? You can contact DWF Ventures directly.


With the highly anticipated NVIDIA GTC 2024 Conference approaching, interest in the convergence of artificial intelligence (AI) and cryptocurrency has surged. This sector, often referred to as AI x Crypto, is rapidly evolving, driven by advancements in blockchain technology and AI capabilities.

As we look ahead to the conference, several significant developments have taken centre stage, highlighting the growing momentum of decentralised AI solutions within the crypto ecosystem. DWF Ventures breaks down the latest trends in AI x Crypto.

AI x Crypto Projects Join NVIDIA Inception Program

One of the most notable recent developments in the AI x Crypto space is the inclusion of several blockchain-powered AI projects in the prestigious NVIDIA Inception Program, an initiative that supports startups by providing access to resources, technical expertise, and market exposure. Projects such as AIOZ Network, Aethir Cloud, MyShell AI, and Virtuals.io have joined the programme, reflecting the increasing recognition of blockchain-based AI solutions by industry leaders.

This partnership is expected to significantly bolster innovation within the sector, enhancing the capabilities of decentralised AI networks by leveraging NVIDIA’s cutting-edge hardware and AI research. As these projects continue to expand, they will likely attract further investment and collaboration, accelerating the adoption of AI-driven blockchain applications.

Growing Demand for Decentralised Computing Resources

As decentralised AI projects scale, their demand for computing power is growing exponentially. Despite their decentralised ethos, many AI x Crypto projects still rely on centralised cloud providers due to the lack of decentralised, reliable, and cost-effective alternatives. However, this reliance may shift with projects like Fetch.ai, which is investing heavily in NVIDIA GPUs to create a decentralised computing infrastructure.

Additionally, Fetch.ai's native FET token introduces a novel utility mechanism, enabling users to stake tokens in exchange for compute credits. This staking model aligns network incentives and provides a sustainable means of accessing decentralised GPU resources, driving wider adoption and reducing dependence on traditional cloud services.

Token Incentives for GPU Networks

In the AI x Crypto space, GPU networks are emerging as a crucial infrastructure layer. Platforms such as io.net and Exabits use blockchain-based incentive models to encourage the provision of GPU resources. These projects leverage native tokens to align interests between compute providers and users, ensuring a more equitable and efficient ecosystem for AI computations.

By offering token-based rewards, these networks aim to scale decentralised GPU availability while maintaining an economic model that sustains long-term participation from both retail and institutional users. This decentralised approach could play a pivotal role in democratising access to AI computation resources, fostering greater inclusivity in the AI development landscape.

Bittensor’s Expanding Role in AI Infrastructure

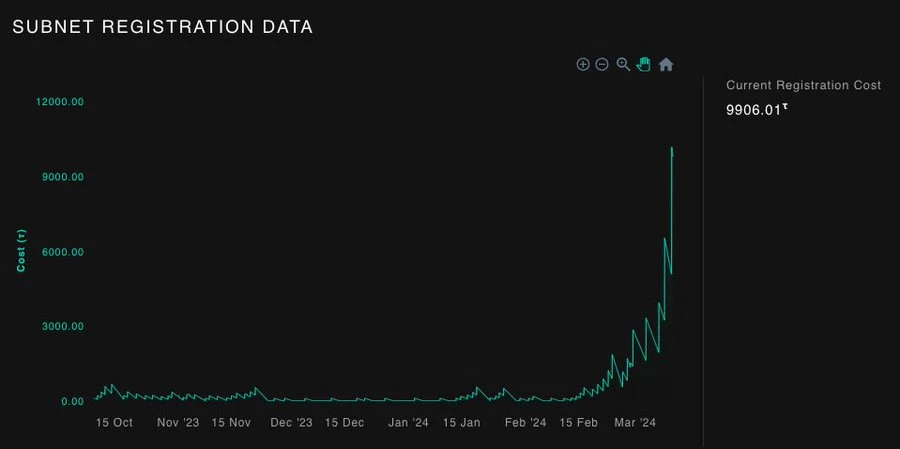

Among decentralised AI networks, Bittensor has gained significant traction as a leading infrastructure provider. Bittensor's architecture consists of subnets, each designed to perform specialised AI and machine learning (ML) tasks, such as data processing, model training, and inference. However, the cost of registering a subnet has risen sharply, to approximately 9,900 TAO (~$6.9 million) at the time of writing.

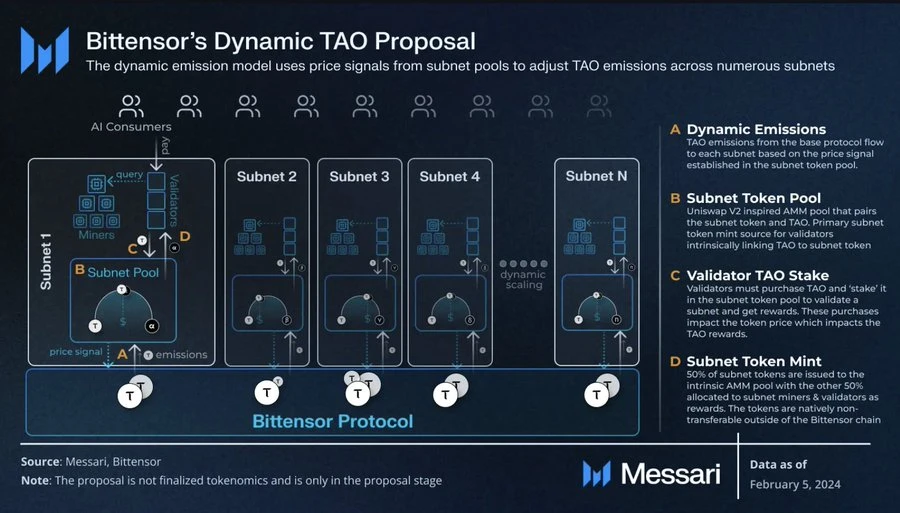

In response to this rising cost, discussions are underway within the community to increase the number of subnets through a governance vote. Additionally, the introduction of Dynamic TAO, expected in Q2 2024, aims to enhance decentralisation by adjusting emissions based on demand for subnet tokens. This mechanism is designed to incentivise higher-quality contributions and promote a more decentralised, meritocratic system.
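To illustrate what "adjusting emissions based on demand for subnet tokens" could look like, the sketch below assumes a simplified design: each subnet token trades against TAO in a constant-product pool, and a block's TAO emission is split across subnets in proportion to those pool prices. Both the pool design and the pro-rata rule are assumptions made for illustration, and the class and function names are hypothetical; the actual Dynamic TAO mechanism may differ in important ways.

```python
from fractions import Fraction


class SubnetPool:
    """Assumed constant-product pool of TAO against one subnet's token (no fees)."""

    def __init__(self, tao: Fraction, alpha: Fraction) -> None:
        self.tao, self.alpha = tao, alpha

    def price(self) -> Fraction:
        """TAO per subnet token."""
        return self.tao / self.alpha

    def stake(self, tao_in: Fraction) -> Fraction:
        """Swap TAO for subnet tokens while keeping tao * alpha constant."""
        k = self.tao * self.alpha
        self.tao += tao_in
        new_alpha = k / self.tao
        out = self.alpha - new_alpha
        self.alpha = new_alpha
        return out


def split_emission(total: Fraction, prices: dict[str, Fraction]) -> dict[str, Fraction]:
    """Hypothetical demand-weighted split: shares proportional to subnet token prices."""
    weight_sum = sum(prices.values(), Fraction(0))
    if weight_sum == 0:
        raise ValueError("need at least one positively priced subnet")
    return {netuid: total * p / weight_sum for netuid, p in prices.items()}


# Two identical subnets; demand for subnet "a" raises its price and its share.
a, b = SubnetPool(Fraction(100), Fraction(100)), SubnetPool(Fraction(100), Fraction(100))
a.stake(Fraction(21))
print(split_emission(Fraction(1), {"a": a.price(), "b": b.price()}))
```

Under this assumed rule, emissions flow toward subnets the market values most, which is the meritocratic incentive the paragraph above describes; how the real mechanism measures demand and guards against manipulation is beyond this sketch.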

Recent Developments in Bittensor Subnets

Several developments have taken place within Bittensor’s subnet ecosystem, highlighting its growing utility and adoption:

- Akash Network (Subnet 27): Provides affordable and accessible GPU resources for AI applications, lowering the barrier to entry for smaller AI projects.

- Open Kaito by Kaito AI (Subnet 5): A fully composable decentralised search layer, offering AI-driven search functionalities that empower users to access blockchain data more efficiently.

These advancements underline the adaptability and expanding ecosystem of Bittensor, positioning it as a key player in decentralised AI infrastructure.

NEAR Protocol’s Growing AI Involvement

NEAR Protocol has also been making strides in the AI x Crypto space, gaining attention ahead of GTC 2024. The protocol’s founder is set to be one of the key panelists at the NVIDIA conference, further cementing NEAR's commitment to AI integration within blockchain technology.

NEAR’s involvement in AI was also a major topic at ETHDenver, where discussions focused on the potential for AI-driven applications to improve blockchain scalability, security, and user experience. The growing enthusiasm around NEAR’s AI initiatives has sparked speculation about future collaborations and technological breakthroughs within its ecosystem.

Overall

With the NVIDIA GTC 2024 Conference poised to highlight AI advancements, the AI x Crypto sector is experiencing unprecedented attention. From decentralised GPU networks to innovative subnet architectures, blockchain-based AI projects are pushing boundaries and redefining scalability solutions. As industry players continue to refine their approaches and introduce governance enhancements, the potential for AI and crypto to intersect in meaningful ways remains vast and promising.

We are committed to supporting projects working on AI x Crypto solutions. If you are interested in partnering with a crypto venture capital fund, don’t hesitate to contact DWF Ventures.